Mumbai Press Center

February 14, 2024 | [ANALYSIS] Instagram Facilitating and Recommending Dissemination of Alleged Child Exploitation Content

Is the world set up to protect the dissemination of child exploitation content online?

At times it certainly feels like it.

Images and videos which show some of the darkest sides of humanity and some of the worst crimes imaginable, involving child abuse, torture and other forms of incomprehensible exploitation, are readily available on the dark web if you know where to look.

Child abuse and exploitation is a massive global problem with no easy solution, as having such content removed from the dark web is proving increasingly difficult.

The dark web requires Tor Browser or other similar software which connects to anonymous and hidden networks that are not available through regular web browsers designed for the surface web - the internet we use every day of our lives.

While having said content restricted to the dark web may seem like a good thing, unfortunately it also means that such content can thrive and be shared with a much smaller chance of being detected by unwanted eyes.

This unfortunately makes it harder for netizens and independent investigation teams to report such content, as it usually takes a sophisticated team of specially trained operatives, working with government-sponsored intelligence agencies and supported by law enforcement, to infiltrate and take down these networks.

These efforts usually require a lot of international collaboration too.

They are having some success. However, the amount of content being found and taken down, and the number of individuals being brought to justice, is minimal compared to the scale of the global problem of child trafficking, abuse and exploitation.

But what happens when the behavior breaches the depths of the dark web and creeps into the public domain?

It's reasonable to believe that would make it easier to report it and have it taken down, right?

A recent and ongoing operation by Freedom Publishers Union, designed to weed out accounts on social networks sharing such content online, reveals that this is actually not the case.

The lack of cooperation and action by Instagram and Meta, its parent company, in taking down what we allege to be exploitation content, is both frustrating and disturbing.

We've been tracking an account on Instagram since December 2023 which openly engages in the dissemination of what we allege to be child pornography and other forms of child sexual exploitation content.

The account's disturbing pattern of behavior, which involves frequent dumps of new content being posted to Instagram, has seen countless different individuals have their content shared through the account.

This is evident because the account's content does not show a single individual; rather, it involves many different individuals, notably all female and spread across a range of ages.

Freedom Publishers Union does not allege that all of the content shared through the Instagram account is related to child exploitation. However, we do allege that some of it is.

Our assessment has revealed what we believe to be children under the age of 18, and some under the age of 15.

While it is impossible to definitively conclude based solely on our observations, we believe some of the youngest girls in the images and videos could be as young as 12.

Some of the more explicit images include that of a completely topless young girl in the shower.

Also, there are multiple explicit and uncensored videos of females openly urinating.

Freedom Publishers Union first noticed the account in December 2023 through a recommendation generated by the Instagram algorithm on our shadow account used for ops, and we immediately started to monitor the account's activities.

Instagram appears not only to be facilitating the dissemination of child exploitation content, but also to be effectively encouraging its wider dissemination by recommending the content through its algorithm.

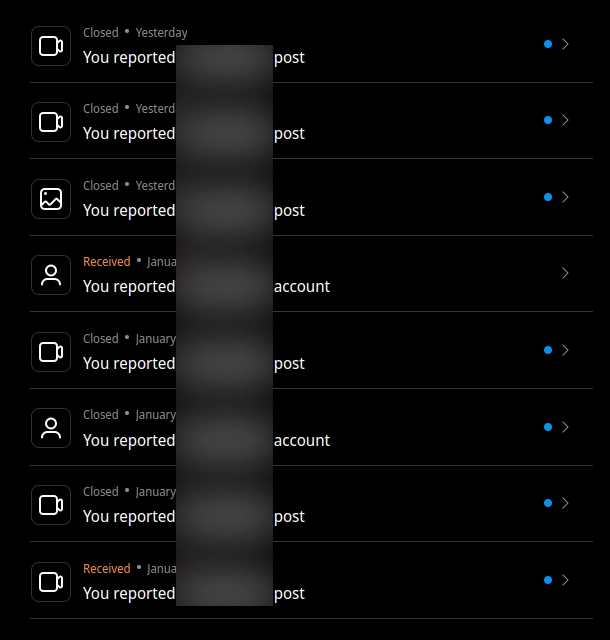

As part of our operation, Freedom Publishers Union has reported selected content, and the account itself, directly to Instagram.

We have reported to Instagram 1 of the worst offending images and 5 of the worst offending videos, which we believe not only indisputably violate the community usage guidelines of the Instagram platform, but also put the account at risk of infringing various laws through disseminating child exploitation content involving young and possibly underage girls.

Instagram has so far ignored 5 of our reports for images and videos shared through the account, with 1 remaining open.

Instagram has also ignored 1 of our 2 reports of the account itself: the first report was closed with no feedback provided by Instagram, and the second remains open, albeit idle.

To our utter disbelief, all of the images and videos we reported remain online at the time of this story going to press.

The facts are obvious and cannot be disputed when the explicit images and videos are right in front of you, for all to see.

Freedom Publishers Union condemns Instagram and Meta for the lack of any action taken in response to our very serious reports.

There is nothing for Instagram to dispute and they must be called out for it.

What is equally upsetting is that we know this is just one of thousands of similar accounts which engage in this type of behavior and exploitation of young girls on the Instagram platform.

The problem is exacerbated by the lack of any action taken by Instagram and its parent company on legitimate reports.

In too many cases we see social network platform moderators (probably bots) respond to reports in a ridiculously, impossibly short amount of time.

This leaves little doubt it is the result of platforms favoring automated AI moderation tools over actual humans to handle reports by netizens.

Despite the many public pledges by big tech to rid social network platforms of such content, and all the hundreds of millions of dollars spent annually on investment into AI moderation tools and machine learning to combat the problem, in our view it's turned out to be a miserable failure.

Dissatisfied with the lack of any action being taken by Instagram, Freedom Publishers Union reported the account to the Australian Federal Police (AFP).

The AFP quickly, and rightfully, dismissed our report, stating that it was not a matter for them to investigate. Ironically, we were instead referred to Instagram or the eSafety Commissioner.

We reported the account to the eSafety Commissioner, as advised by the AFP.

The report was again quickly dismissed, with the eSafety Commissioner stating that despite the content being "not suitable for those aged under 18 years", they have no jurisdiction to act on content hosted outside of Australia (which is basically all big tech).

However, it was interesting to note that the eSafety Commissioner did accept there were issues with the content, flagging it as "class 2 material", which reassures us that we have a case to argue against Instagram.

During the investigation and compiling of this story, and as part of our ongoing operation, Freedom Publishers Union scraped the Instagram account and archived its contents - all images and videos - and is fully prepared to provide assistance and a copy of the archive to law enforcement, if requested.

At the time we scraped the account we archived 16 images and 333 videos, bringing the total number of files to 349.

At the time of this story going to press, the account has expanded and we continue to monitor its activities.

For Freedom Publishers Union this remains an open investigation, and we are continuing to press Instagram on the matter to understand how the content remains online and why reports are being ignored or immediately closed.

Asia/Pacific Press Office - Mumbai Press Center

Written by The Editorial Board.

Post-publication updates

Finally, some positive news!

— Reprobus, the ®Cicada 3301 🏴☠️👻 (@Cicada3301AU) July 1, 2024

An update to a story published to Freedom Publishers Union, on February 14, 2024.

-----

[ANALYSIS] Instagram Facilitating and Recommending Dissemination of Alleged Child Exploitation Content https://t.co/BQYhAuZcH8

-----

Since this story was…